This year, the major conference of our field was held in Waltham, USA, in the Boston area.

I could only be there for three days (two days of 3DUI and one day of IEEE VR), and as I was chairing the 3DUI contest I couldn’t attend as many talks as I would have liked.

So I’ll first talk about an interesting topic that was widely discussed there and later try to talk about the rest.

Perceptual Illusions

I don’t know if it’s a real trend or if it’s just because I’m getting more and more interested in the subject, but I believe there are more and more papers about perceptual illusions: understanding the limits of our perception and exploiting them to overcome the current limitations of VR systems.

Check out the PIVE workshop website and its proceedings.

These illusions mostly use our visual system to distract other senses. I have already talked about Redirected Walking, which deliberately lets the user’s virtual position and orientation drift away from the real ones so that he can walk through a virtual area larger than the real one.

Now two papers use change blindness: Evan A. Suma (“Exploiting Change Blindness to Expand Walkable Space in a Virtual Environment”) and Frank Steinicke (“Change Blindness Phenomena for Stereoscopic Projection Systems”):

Steinicke: (…) modifications to certain objects can literally go unnoticed when the visual attention is not focused on them. Change blindness denotes the inability of the human eye to detect modifications of the scene that are rather obvious–once they have been identified. These scene changes can be of various types and magnitudes, for example, prominent objects could appear and disappear, change color, or shift position by a few degrees. (…) Such change blindness effects have great potential for virtual reality (VR) environments, since they allow abrupt changes of the visual scene which are unnoticeable for users. Current research on human perception in virtual environments (VEs) focuses on identifying just-noticeable differences and detection thresholds that allow the gradual introduction of imperceptible changes to a visual scene. Both of these approaches–abrupt changes and gradual changes–exploit limitations of the visual system in order to introduce significant changes to a virtual scene. (…) These change blindness studies have led to the conclusion that the internal representation of the visual field is much more sparse than the subjective experience of “seeing” suggests and essentially contains only information about objects that are of interest to the observer.

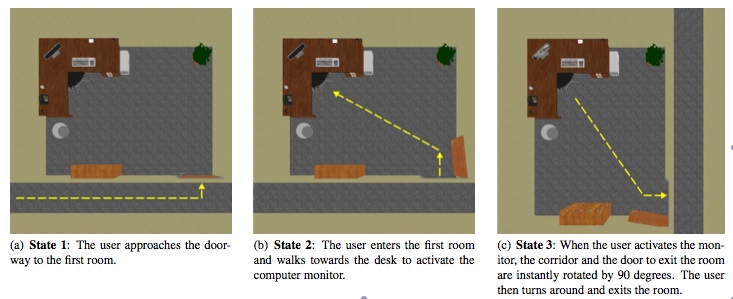

Suma: Since usually the architecture of an environment does not suddenly change in the real world, these assumptions may carry over into the virtual world. Thus, subtle changes to the scene that occur outside of the user’s field of view may go unnoticed, and can be exploited to redirect the user’s walking path. Figure 1 shows an example modification where the doorway to exit a room is rotated, causing users to walk down the virtual hallway in a different direction than when they first entered the room. These “doorway switches” can be used to allow the user to explore an environment much larger than the physical workspace.
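To make the idea concrete, here is a minimal sketch of the "change it while nobody is looking" rule. It is not Suma's implementation: the 2D positions, the `scene` dictionary, the 90° field of view and the function names are all assumptions made for illustration.

```python
import math

def is_in_view(user_pos, view_dir_deg, target_pos, fov_deg=90.0):
    """True if target_pos lies inside the user's horizontal field of view."""
    dx = target_pos[0] - user_pos[0]
    dy = target_pos[1] - user_pos[1]
    angle_to_target = math.degrees(math.atan2(dy, dx))
    # Signed angular difference wrapped into [-180, 180).
    diff = (angle_to_target - view_dir_deg + 180.0) % 360.0 - 180.0
    return abs(diff) <= fov_deg / 2.0

def try_doorway_switch(scene, user_pos, view_dir_deg, new_door_pos):
    """Move the door only if neither its old nor its new position is visible."""
    for pos in (scene["door_pos"], new_door_pos):
        if is_in_view(user_pos, view_dir_deg, pos):
            return False  # the change could be seen, so wait
    scene["door_pos"] = new_door_pos  # hypothetical scene representation
    return True
```

A real system would test against the full view frustum (and probably add a safety margin), but the principle is the same: the switch is only applied while the user's back is turned.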

Another paper, by Tabitha Peck (“Improved Redirection with Distractors: A Large-Scale-Real-Walking Locomotion Interface and its Effect on Navigation in Virtual Environments”), lets you walk naturally:

We designed and built Improved Redirection with Distractors (IRD), a locomotion interface that enables users to really walk in larger-than-tracked-space VEs. Our locomotion interface imperceptibly rotates the VE around the user, as in Redirected Walking [15], while eliminating the need for predefined waypoints by using distractors (…)

The scene is always slowly rotating so that the user is always walking more or less towards the center of the real room. If the user gets too close to a real wall, the system creates a distracting object, like a butterfly, for the user to look at. While he is following the distractor with his head, the scene is rotated further, so that when he looks back at the original position, he is facing away from the wall.

We rotate the scene based on head turn rate because user perception of rotation is most inaccurate during head turns.
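A rough sketch of what "rotating based on head turn rate" could look like in code. This is not the IRD algorithm from the paper: the baseline rate, the gain and the `steer_sign` parameter are hypothetical values chosen only to show the shape of the idea.

```python
def scene_rotation_step(head_turn_rate_deg_s, dt, steer_sign=1,
                        baseline_deg_s=0.5, gain=0.1):
    """Extra scene rotation (in degrees) to inject during one frame.

    head_turn_rate_deg_s: measured head yaw rate, in degrees per second
    dt: frame duration, in seconds
    steer_sign: +1 or -1, the direction that steers the user towards
                the center of the real room (assumed to be given)
    baseline_deg_s, gain: hypothetical tuning values, not from the paper
    """
    # Inject more rotation when the head turns fast, since the user
    # is least able to notice it then.
    rate = baseline_deg_s + gain * abs(head_turn_rate_deg_s)
    return steer_sign * rate * dt
```

With these made-up values, a user holding his head still gets 0.5° of scene rotation per second, while a 100°/s head turn (following the butterfly, for example) bumps that to 10.5°/s for the duration of the turn.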

A paper by Maud Marchal (“Walking Up and Down in Immersive Virtual Worlds: Novel Interactive Techniques Based on Visual Feedback”) also modifies the user’s view while he walks, to give him the sensation of going up or down:

We introduce novel interactive techniques to simulate the sensation of walking up and down in immersive virtual worlds based on visual feedback. Our method consists in modifying the motion of the virtual subjective camera while the user is really walking in an immersive virtual environment. The modification of the virtual viewpoint is a function of the variations in the height of the virtual ground.

This is very similar to the pseudo-haptics applications by Anatole Lecuyer. Maybe it’s because they work in the same team at Inria 😉
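As a rough illustration of a viewpoint that is "a function of the variations in the height of the virtual ground", here is a small sketch. It is only my guess at the general shape of such a technique, not the method from the paper: the `ground_height` callback, the eye height, the finite-difference step and the pitch gain are all assumptions.

```python
import math

def camera_adjustment(ground_height, x, step=0.1,
                      eye_height=1.7, pitch_gain=0.5):
    """Return (camera_height, pitch_deg) for a user standing at position x.

    ground_height: hypothetical function giving the virtual ground
                   height (in meters) at a position along the path
    step: distance ahead used to estimate the slope
    pitch_gain: hypothetical factor, how strongly the slope tilts the view
    """
    # Estimate the local slope of the virtual ground just ahead of the user.
    slope = (ground_height(x + step) - ground_height(x)) / step
    # Raise or lower the camera with the ground...
    cam_height = eye_height + ground_height(x)
    # ...and tilt it according to the slope (up when climbing).
    pitch_deg = pitch_gain * math.degrees(math.atan(slope))
    return cam_height, pitch_deg
```

On flat ground nothing changes; on a 45° virtual ramp this sketch would tilt the camera by 22.5° while the user keeps walking on the flat real floor.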

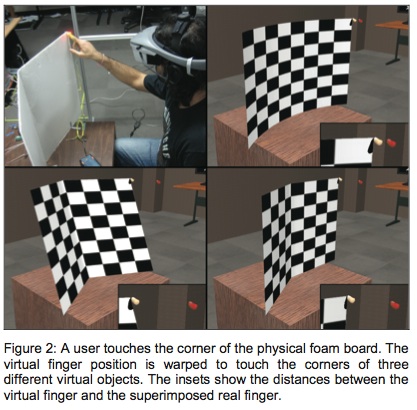

Speaking of pseudo-haptics, the best poster award went to Luv Kohli for “Redirected Touching: Warping Space to Remap Passive Haptics”:

This poster explores the possibility of mapping many differently shaped virtual objects onto one physical object by warping virtual space and exploiting the dominance of the visual system. (…) This technique causes the user’s avatar hand to move in virtual directions different from its real-world motion, such that the real and avatar hands reach the real and virtual objects simultaneously.

This means that with one real object, you can simulate the touch of multiple virtual objects. Your visual system will override your sense of touch.
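Here is a very simplified sketch of the idea that both hands should arrive at the same time. It is not Kohli's space-warping method, just a linear offset that grows as the real hand approaches the real object; the function name and its parameters are mine.

```python
import math

def warp_hand(real_hand, start, real_target, virtual_target):
    """Map the real hand position to the avatar hand position (3D tuples).

    start: where the real hand was when the reach began
    real_target: point to be touched on the physical object
    virtual_target: corresponding point on the virtual object
    """
    total = math.dist(start, real_target)
    if total == 0.0:
        progress = 1.0
    else:
        remaining = math.dist(real_hand, real_target)
        # 0 at the start of the reach, 1 when the real hand touches the object.
        progress = min(1.0, max(0.0, 1.0 - remaining / total))
    # Shift the avatar hand by a growing share of the real/virtual mismatch.
    return tuple(h + progress * (v - r)
                 for h, r, v in zip(real_hand, real_target, virtual_target))
```

At the start of the reach the avatar hand coincides with the real hand, and when the real hand touches the physical object the avatar hand sits exactly on the virtual one, even though the two objects are in different places.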

You can do the same with olfaction, as described in a paper by Aiko Nambu (“Visual-Olfactory Display Using Olfactory Sensory Map”). Creating a smell requires a lot of different basic smells. By displaying a matching object, you can trick your nose: the scent of a lemon will seem to smell like an orange if you display an orange, and the scent of a peach can be used for strawberries.

It’s really incredible what you learn thanks to VR. We think our perception is perfect and flawless, but in fact we perceive our environment quite poorly; it’s just good enough to survive.

I stumbled across your blog a year ago, and since then I’ve been intermittently stopping by to read your latest posts. So, it was really exciting to see my work featured here! And you’re right about perceptual illusions being a “hot topic” the past couple years, with multiple research groups actively exploring these issues.

Thank you Evan, I can’t wait to see more papers about that!

And congratulations on your PhD 😉