The two days before the main IEEE VR conference are filled with other international events, mostly running in parallel, so choosing between them is really heartbreaking. The organizers managed to keep the VR conference itself to a single track, which was really nice.

I won’t be able to really sum up what happened during 3DUI and the SEARIS workshop (Software Engineering and Architectures for Interactive Systems, they now have a nice webpage!) because I couldn’t attend much of either (you can still read last year’s 3DUI and SEARIS summaries to get an idea).

The one message I think was critical came from Chad Wingrave, who stated that 3D interactions are stagnating because 3DUI is a hard and unexplored software engineering problem. So this is not only a problem of ergonomics, but also of infrastructure: how do you build something to support 3D interaction, and how do you even specify what an interaction should do and how it should behave?

Online presentations

The good news is that many of the 3DUI presentations are now online (but not the actual papers)! This is a really nice feature of the VGTC. Maybe the SEARIS team will do the same?

So what did I do during this time?

On Saturday, David and I gave a Virtools tutorial.

On Sunday morning I attended a great tutorial on how to “Conduct Human-Subject Experiments” by J. Edward Swan II, Stephen R. Ellis, Bernard D. Adelstein and Joseph L. Gabbard. This was really eye-opening! I’m glad this tutorial was given; I’ve never run a user study, and I now have a better understanding of how complex it is to design such experiments and analyze the results.

J. Edward Swan II

PIVE 2009

The Perceptual Illusions in Virtual Environments (PIVE) workshop on Sunday afternoon also ran in parallel with the 3DUI conference. The organizers (Frank Steinicke, who else?) have put the actual papers online on the PIVE website.

Here’s what this workshop is about:

Virtual environments (VEs) provide humans with synthetic worlds in which they can interact with their virtual surrounding. However, while interacting in a VE system, humans are still located in the physical setup: they move through a laboratory space or may touch real-world objects. This duality of being in the real world while receiving visual, haptic, or aural information from the virtual world places users in a unique situation, forcing them to integrate (or separate) stimuli from potentially different sources simultaneously. The fact that a person’s perception of a virtual reality environment can vary enormously from the perception of real world environments opens up a broad field of potential applications that take advantage of perceptual illusions. Such illusions arise from misinterpretation by the brain of sensory information:

- Visual illusions exploit the fact that vision usually dominates proprioceptive and vestibular senses. Based on this, redirected walking can force users to be guided on physical paths, which may vary from the paths on which they perceive they are walking in the virtual world.

- Haptic illusions may give users the impression of feeling virtual objects by touching real world props. The physical objects that represent and provide passive haptic feedback for the virtual objects may vary in size, weight, or surface from the virtual counterparts without users observing the discrepancy.

- Acoustic illusions may result in users perceive (self-)motion (such as vection) when no such visual motion is being supplied.

Frank Steinicke, Markus Lappe, Robert Lindeman, Victoria Interrante, Anatole Lécuyer

The idea is that we learn more through errors, and perceptual illusions help us understand perception by introducing errors into the system.

One really interesting paper was “Travel Distance Estimation from Leaky Path Integration in Virtual and Real Environments”, by Markus Lappe and Harald Frenz, which gives a potential explanation of why travel distance is underestimated in VEs. To sum up, when moving in a VE, we “lose” some movement information because the current hardware is not able to give realistic visuals. As we estimate the distance travelled visually by analyzing the optic flow, losing some of this information results in an underestimation of that distance.
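To get a feel for what a “leaky” integrator does to a distance estimate, here is a minimal Python sketch. It is only a generic leaky integrator and not necessarily the formulation used in the paper: the function names and the `gain` and `leak` parameters and their values are my own assumptions, chosen for illustration. The perceived distance grows with each step travelled, but a fraction of what has already been accumulated leaks away, so the estimate falls further and further behind the real distance.

```python
import math


def leaky_path_integration(speed, duration, gain=1.0, leak=0.05, dt=0.01):
    """Accumulate a perceived travel distance with a leak.

    Hypothetical illustration: at each small step dx the estimate p changes
    by (gain - leak * p) * dx. 'gain' and 'leak' are made-up parameters,
    not values taken from the paper.
    """
    perceived = 0.0
    steps = int(round(duration / dt))
    for _ in range(steps):
        dx = speed * dt  # real distance covered during this time step
        perceived += (gain - leak * perceived) * dx
    return perceived


def closed_form(distance, gain=1.0, leak=0.05):
    """Analytical solution of dp/dx = gain - leak * p with p(0) = 0."""
    return (gain / leak) * (1.0 - math.exp(-leak * distance))


if __name__ == "__main__":
    actual = 20.0  # metres really travelled (1 m/s for 20 s)
    estimate = leaky_path_integration(speed=1.0, duration=20.0)
    print(f"actual distance:    {actual:.2f} m")
    print(f"perceived distance: {estimate:.2f} m")
    print(f"closed form:        {closed_form(actual):.2f} m")
```

With these made-up parameters the perceived distance comes out clearly shorter than the 20 m actually travelled, and the gap widens as the path gets longer. A `gain` below 1 could stand in for the movement information lost to the display, but that mapping is my own guess.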

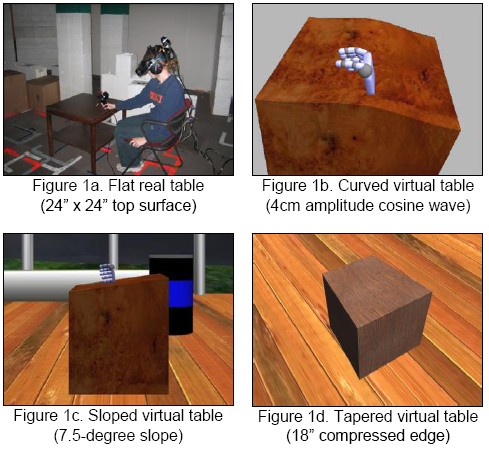

Another paper I really liked is “Exploiting Perceptual Illusions to Enhance Passive Haptics”, by Luv Kohli.

Passive haptic feedback is very compelling, but a different physical object is needed for each virtual object requiring haptic feedback. I propose to enhance passive haptics by exploiting visual dominance, enabling a single physical object to provide haptic feedback for many differently shaped virtual objects.

This means that with a simple flat real table, you should be able to simulate different surfaces like a tilted plane, or even an uneven surface, because the visual sense dominates what your hand feels!

Stay tuned for more about the actual IEEE VR conference!
