One of the very good surprises of creating my VR Wall is that the Nvidia 3D vision driver with a GeForce doesn’t (completely) break VR cameras.

I’ve managed to get a pretty good calibration, and I’ll detail the steps here.

Some important issues remain (see the end of the article), but the results are already very interesting!

Definitions

VR cameras compute a correct perspective when looking at a screen with your head tracked, resulting in an asymmetric frustum.
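To make the idea concrete, here is a minimal sketch of how such an asymmetric frustum can be derived from a tracked head position. It is not the Virtools or 3D Vision code: the function name `off_axis_frustum` and the coordinate convention (screen centred on the origin in the plane z = 0, eye in front of it at z > 0) are assumptions chosen for illustration. The result is a set of left/right/bottom/top extents at the near plane, in the style expected by a `glFrustum`-like call.

```python
def off_axis_frustum(eye, screen_width, screen_height, near):
    """Return (left, right, bottom, top) frustum extents at the near plane.

    Assumed convention (hypothetical, for illustration only):
    the screen is centred on the origin in the plane z = 0,
    x points right, y points up, and the eye sits at z > 0.
    All lengths use the same unit (e.g. metres).
    """
    ex, ey, ez = eye
    if ez <= 0:
        raise ValueError("eye must be in front of the screen (z > 0)")
    # Similar triangles: scale the screen edges, seen from the eye,
    # down from the screen plane to the near plane.
    scale = near / ez
    left = (-screen_width / 2 - ex) * scale
    right = (screen_width / 2 - ex) * scale
    bottom = (-screen_height / 2 - ey) * scale
    top = (screen_height / 2 - ey) * scale
    return left, right, bottom, top


# Head centred in front of a 3 x 2 screen: the frustum is symmetric.
centred = off_axis_frustum((0.0, 0.0, 2.0), 3.0, 2.0, 0.1)
# Head moved 0.5 to the right: the frustum becomes asymmetric.
shifted = off_axis_frustum((0.5, 0.0, 2.0), 3.0, 2.0, 0.1)
print(centred)
print(shifted)
```

When the head is centred, left and right are opposite values. As soon as the head moves sideways, one side of the frustum grows and the other shrinks, which is the asymmetry discussed here.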

I was afraid that if I gave an asymmetric frustum to the 3D Vision driver, it would go crazy, but it doesn’t!

It *seems* to compute the correct frusta for both eyes. I don’t have a formal proof that everything is correct yet, but I have several clues.

(With a Quadro you don’t have this problem since you compute the frustum for both eyes manually.)

The first clue is that the stereo “feels” good. This is not very scientific but it’s already a good start.

Then I had two problems :

– I didn’t know what the 3D Vision driver supposes the distance between my eyes is. It needs to be exactly the physical distance otherwise the objects will appear smaller or bigger than natural. Remember we’re trying to make a 1:1 mapping between the virtual and the physical world here.

– The driver also assumes a screen distance, that is, the distance between your eyes and the screen. 3D objects that stand at that distance are in the plane of the screen: their parallax (the distance between the red and blue dots below) is zero, so the left and right pictures of such an object are at the same place. But the driver doesn’t know the particular distance of my setup.

( Figures from this course )

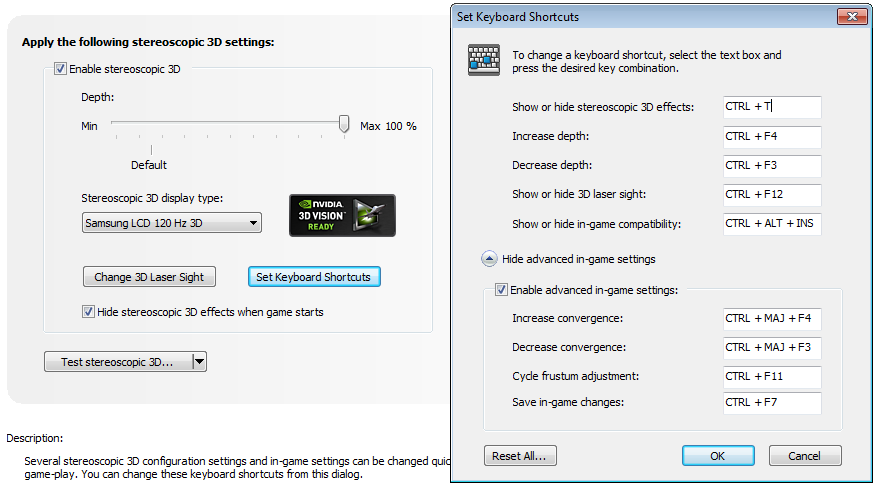
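The relation between those two unknowns and the parallax measured on the screen can be written down with similar triangles. The sketch below is only an illustration under simple assumptions: the object is straight ahead of the viewer, the eyes are level and parallel to the screen, and the function name `screen_parallax` is made up for this example. It says nothing about how the 3D Vision driver computes things internally.

```python
def screen_parallax(ied, screen_dist, object_dist):
    """On-screen parallax of a point straight ahead of the viewer.

    ied         -- inter-eye distance
    screen_dist -- distance from the eyes to the screen (the convergence)
    object_dist -- distance from the eyes to the object
    All values share one unit (e.g. cm). The result is positive for
    objects behind the screen and negative for objects in front of it.
    """
    if object_dist <= 0:
        raise ValueError("object must be in front of the eyes")
    return ied * (object_dist - screen_dist) / object_dist


ied, screen = 6.5, 200.0  # cm, example values only
for dist in (100.0, 200.0, 400.0, 1e9):
    print(dist, screen_parallax(ied, screen, dist))
```

An object at the screen distance gives zero parallax, and the parallax tends towards the full inter-eye distance as the object moves towards infinity. Both calibration steps that follow rely on these two properties.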

The 3D Vision driver allows you to manipulate a “Depth Amount” (probably the Inter-eye distance) but this has no metric. You can also manipulate the convergence (i.e. the screen distance), but you still don’t have a real measurable value.

(There is also a “Cycle frustum adjustment” function, but I have no idea what it does apart from stretching the frustum!)

Calibrating the convergence

Please note that this is a very empirical method, so if you see any flaw please let me know !

The easiest thing to check is the convergence, i.e. the distance between my eyes and the screen. As we said, if an object is at the convergence distance, both the left and right pictures of this object will be at the same place.

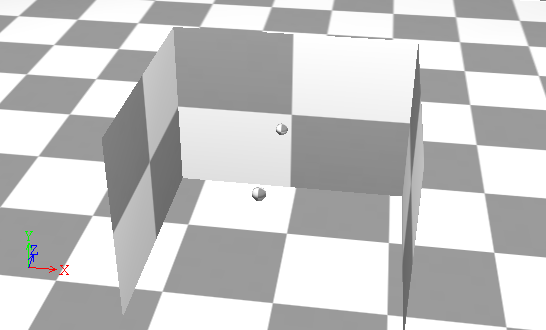

So in Virtools I created a 3D scene to test that :

The box has the same width and height as the screen, and the front of the box exactly fits my projected screen.

The first ball (lowest one), is exactly at the convergence distance, so if the convergence distance is set right in the 3D Vision drivers, there shouldn’t be any parallax.

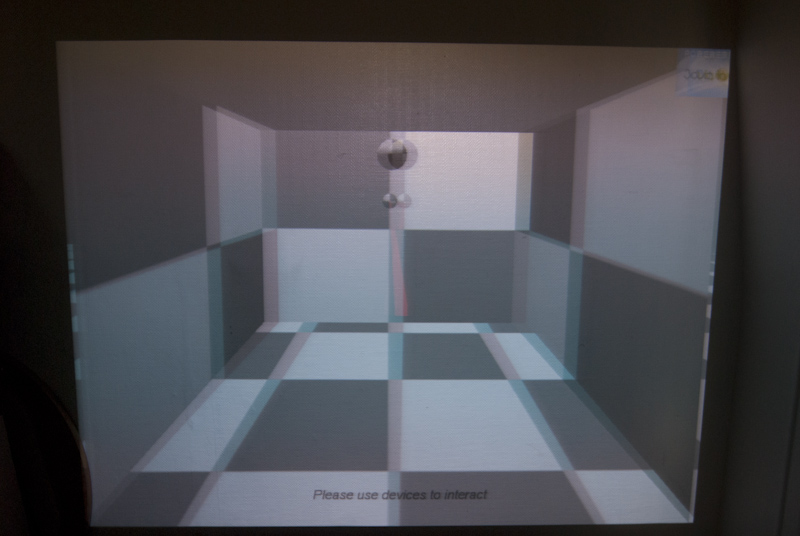

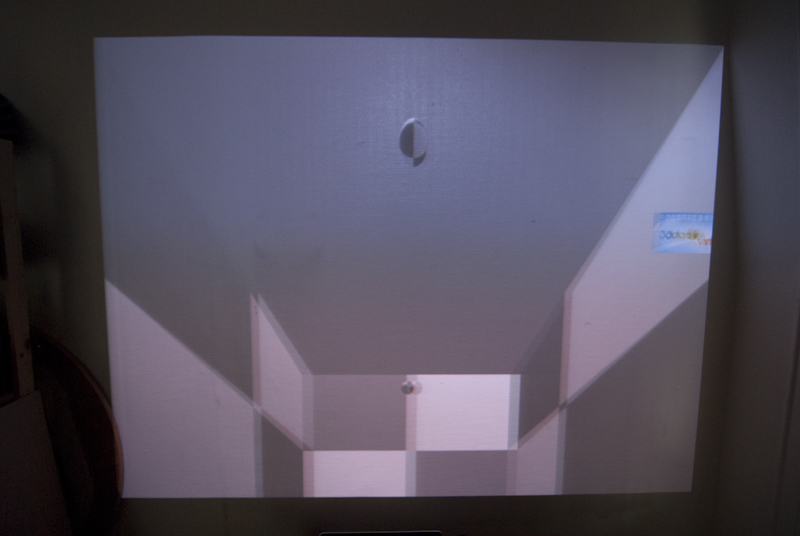

See the big ball ? It has two images, which means that the convergence is not right ! The object is too far away, so by increasing the convergence, I will bring it closer until it has only one picture.

Now that’s better. For more precision, make sure that the center of the ball has zero parallax.

Calibrating the inter-eye distance

Now what about the inter-eye distance ?

(This is also very empirical, so if you see a flaw let me know!)

If you look again at the Figure 1 above, you’ll notice that if we place an object infinitely far away, its parallax should be exactly the distance between the eyes.

I wanted to try another method :

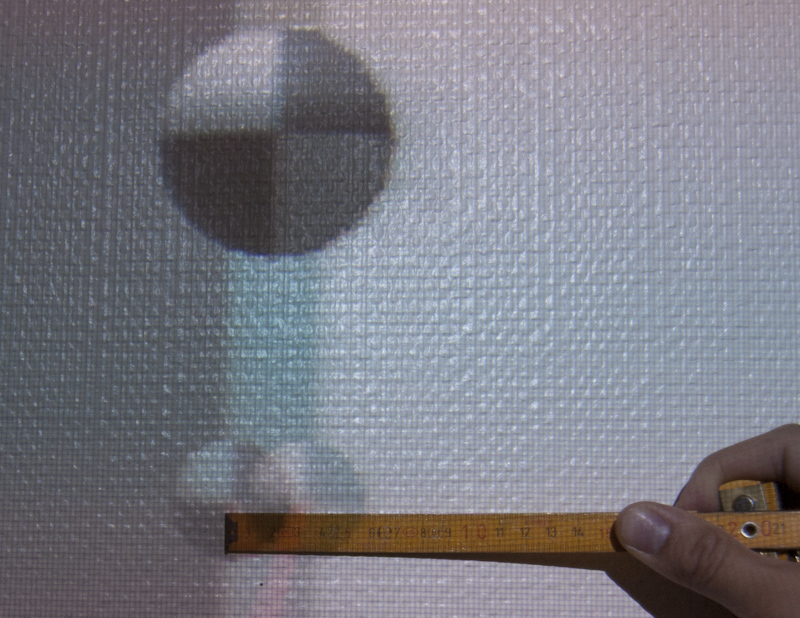

Suppose I place an object as far behind the screen as my eyes are in front of it, so that it is at distance 2d from my eyes when the screen is at distance d. By Thales’s theorem: p / IED = d / (2d) = 1/2

So the parallax of this object gives me half the inter-eye distance.

By changing the “Depth Amount” of the 3D Vision driver, you change the inter-eye distance until the measured parallax is 6.5 cm / 2 = 3.25 cm:

(notice my awful wallpaper and the big size of the pixels…)

I had to push the depth amount to nearly the maximum to get this separation.
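The same similar-triangles relation can be turned around to estimate which inter-eye distance the driver is currently using from a parallax measured with a ruler on the screen. This is a minimal sketch under the same assumptions as the method above (object straight ahead, eyes level and parallel to the screen); the function name `ied_from_parallax` is hypothetical and the numbers are example values.

```python
def ied_from_parallax(parallax, screen_dist, object_dist):
    """Estimate the inter-eye distance implied by a measured parallax.

    parallax    -- parallax measured on the screen
    screen_dist -- distance from the eyes to the screen
    object_dist -- distance from the eyes to the virtual object
    All values share one unit (e.g. cm).
    """
    if object_dist == screen_dist:
        # An object in the screen plane always has zero parallax,
        # so it carries no information about the inter-eye distance.
        raise ValueError("object must not lie in the screen plane")
    return parallax * object_dist / (object_dist - screen_dist)


# Object placed as far behind the screen as the eyes are in front of it:
# screen at 200 cm, object at 400 cm from the eyes.
print(ied_from_parallax(3.25, 200.0, 400.0))
```

With the object at twice the screen distance, the estimate is simply twice the measured parallax, which matches the 3.25 cm target for a 6.5 cm inter-eye distance.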

Checking the calibration

One great way to check your calibration is simply to place a virtual object that has the same shape and the same position as a real object.

Since we’re trying to achieve a one to one mapping from the real world to the virtual world, the two objects should match perfectly.

Actually, it’s exactly the way car manufacturers calibrate their CAVEs, with a big physical cube. Depth perception is critical for them, so with this method they check both the CAVE configuration and the inter-eye distance of a specific user.

I’ve tried to do that, and within a few centimeters (for a 33 cm deep box stuck to the wall), the real and the virtual box approximately match.

I’ve reached the Gametrak’s limits in terms of precision. Hopefully the check will be more relevant with better tracking.

But all in all, it seems the calibration is not so bad!

Issues

Unfortunately, some non-negligible issues remain:

– If you have followed closely, you’ll wonder what happens when you move your head closer or farther away. You’re right, we should modify the convergence distance of the 3D Vision drivers dynamically! I have yet to see if that’s at all possible other than with the keyboard shortcut.

– Another problem is that the 3D Vision driver assumes that your eyes stay horizontal and parallel to the screen. You can’t bend and you can’t look from the sides, otherwise the stereo will be wrong. It may be possible to diminish this problem by reducing the inter-eye distance when we detect that the user is bending or rotating.
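As a rough illustration of that last idea, one possible rule is to keep only the component of the eye baseline that still lies along the screen’s horizontal axis. This is an assumption made for this sketch, not something the driver does or exposes: the function name `effective_separation` is hypothetical, the roll and yaw angles are assumed to come from the head tracker, and the vertical and depth offsets between the eyes are simply ignored.

```python
import math


def effective_separation(ied, roll_deg=0.0, yaw_deg=0.0):
    """Horizontal component of the eye baseline for a rotated head.

    ied      -- physical inter-eye distance
    roll_deg -- head tilt around the viewing axis (bending sideways)
    yaw_deg  -- head rotation around the vertical axis
    Assumption: only the projection of the baseline onto the screen's
    horizontal axis is kept; the other components are discarded.
    """
    roll = math.radians(roll_deg)
    yaw = math.radians(yaw_deg)
    return ied * math.cos(roll) * math.cos(yaw)


for roll in (0.0, 30.0, 60.0, 90.0):
    print(roll, effective_separation(6.5, roll_deg=roll))
```

The separation stays at the full inter-eye distance for a level head and shrinks towards zero as the head tilts to 90 degrees, where horizontal-only stereo can no longer be correct. How to feed such a value to the driver at run time is a separate question that this sketch does not address.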

Conclusion

The 3D Vision driver is meant to transform any 2D game into stereoscopic 3D. It assumes that the player stays static in front of his screen, and that he adjusts the inter-eye distance for comfort rather than for realistic stereo.

After some calibration it’s possible to get close to an interesting solution for a homebrew VR setup. If you don’t need precise depth and size estimation, it will probably be good enough for you.

But if you want perfect stereo and head tracking, the easiest way is still to use a Quadro, because it gives you a real quad buffer for which you can compute the correct perspective for both eyes yourself!

oh..This needs so much of work..? and thanx for the info..

In fact there are only 2 parameters to configure: ‘Depth Amount’ and ‘Convergence’.

And I’m just trying to do it right to have a perfectly calibrated VR setup.

If you don’t calibrate anything, you probably won’t really notice anything wrong.

A good calibration is important to correctly perceive objects’ size and distance.

Thanks, Sebestain for the great info.

Sujeet